Instantiation¶

*NOTE:* Make sure you have run the prerequisites section before running this section.

The RGBDImages structure holds batched frame tensors so that they can be passed easily to SLAM algorithms. It also supports computing vertex maps and normal maps, both local and global.

An RGBDImages object can be initialized from RGB images, depth images, intrinsics and (optionally) poses. RGBDImages supports both a channels first and a channels last representation.

[2]:

print(f"colors shape: {colors.shape}") # torch.Size([2, 8, 240, 320, 3])

print(f"depths shape: {depths.shape}") # torch.Size([2, 8, 240, 320, 1])

print(f"intrinsics shape: {intrinsics.shape}") # torch.Size([2, 1, 4, 4])

print(f"poses shape: {poses.shape}") # torch.Size([2, 8, 4, 4])

print('---')

# instantiation without poses

rgbdimages = RGBDImages(colors, depths, intrinsics)

print(rgbdimages.shape) # (2, 8, 240, 320)

print(rgbdimages.poses) # None

print('---')

# instantiation with poses

rgbdimages = RGBDImages(colors, depths, intrinsics, poses)

print(rgbdimages.shape) # (2, 8, 240, 320)

print('---')

colors shape: torch.Size([2, 8, 240, 320, 3])

depths shape: torch.Size([2, 8, 240, 320, 1])

intrinsics shape: torch.Size([2, 1, 4, 4])

poses shape: torch.Size([2, 8, 4, 4])

---

(2, 8, 240, 320)

None

---

(2, 8, 240, 320)

---
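
The shapes printed above suggest the input layout: colors are (B, L, H, W, 3), depths are (B, L, H, W, 1), intrinsics are (B, 1, 4, 4) and poses are (B, L, 4, 4), where B is the batch size and L the sequence length. As a minimal sketch, the following builds placeholder arrays with that layout in NumPy. The focal lengths and principal point are made-up values, and embedding the 3x3 pinhole matrix in the top-left corner of a 4x4 identity is an assumption about the intrinsics convention, not something this page confirms.

```python
import numpy as np

B, L, H, W = 2, 8, 240, 320  # batch size, sequence length, height, width

# Placeholder frames in channels-last layout.
colors = np.zeros((B, L, H, W, 3), dtype=np.float32)
depths = np.ones((B, L, H, W, 1), dtype=np.float32)

# Hypothetical pinhole parameters (illustrative values only).
fx, fy, cx, cy = 300.0, 300.0, W / 2.0, H / 2.0

# Assumed convention: 3x3 camera matrix embedded in a 4x4 identity.
K = np.eye(4, dtype=np.float32)
K[0, 0], K[1, 1], K[0, 2], K[1, 2] = fx, fy, cx, cy

# One intrinsics matrix per sequence, shared by all frames of that sequence.
intrinsics = np.tile(K, (B, 1, 1, 1))

# One 4x4 camera pose per frame; identity means "no motion".
poses = np.tile(np.eye(4, dtype=np.float32), (B, L, 1, 1))

print(colors.shape, depths.shape, intrinsics.shape, poses.shape)
```

Arrays like these could be converted with torch.from_numpy before being handed to RGBDImages.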

Indexing and slicing¶

*NOTE:* Make sure you have run the prerequisites section before running this section.

Basic indexing and slicing of RGBDImages over the first (batch) dimension and the second (sequence length) dimension is supported.

[3]:

# initialize RGBDImages

rgbdimages = RGBDImages(colors, depths, intrinsics, poses)

# indexing

rgbdimages0 = rgbdimages[0, 0]

print(rgbdimages0.shape) # (1, 1, 240, 320)

print('---')

# slicing

rgbdimages1 = rgbdimages[:2, :5]

print(rgbdimages1.shape) # (2, 5, 240, 320)

print('---')

(1, 1, 240, 320)

---

(2, 5, 240, 320)

---
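
Note that, according to the output above, rgbdimages[0, 0] has shape (1, 1, 240, 320): the batch and sequence dimensions are kept rather than dropped. A plain array behaves differently under integer indexing. The NumPy sketch below uses a placeholder array to contrast the two and shows that length-one slices reproduce the kept-dimension result.

```python
import numpy as np

frames = np.zeros((2, 8, 240, 320))  # stand-in for a (B, L, H, W) batch

dropped = frames[0, 0]    # integer indexing drops both leading dimensions
kept = frames[0:1, 0:1]   # length-one slices keep them
sliced = frames[:2, :5]   # ordinary slicing over batch and sequence

print(dropped.shape)  # (240, 320)
print(kept.shape)     # (1, 1, 240, 320)
print(sliced.shape)   # (2, 5, 240, 320)
```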

Vertex maps and normal maps¶

*NOTE:* Make sure you have run the prerequisites section before running this section.

This section demonstrates accessing vertex maps and normal maps from RGBDImages. Vertex maps are computed the first time the RGBDImages.vertex_map property is accessed and are cached afterwards, so later accesses need no further computation. The same applies to normal maps.

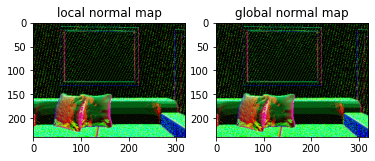

RGBDImages has a local vertex map property (RGBDImages.vertex_map), which gives vertex positions with respect to each frame, and a global vertex map property (RGBDImages.global_vertex_map), which uses the poses of the RGBDImages object to compute vertex positions in the global frame. The same holds for RGBDImages.normal_map and RGBDImages.global_normal_map.

[4]:

# initialize RGBDImages

rgbdimages = RGBDImages(colors, depths, intrinsics, poses)

# compute vertex maps and normal maps

print(rgbdimages.vertex_map.shape) # torch.Size([2, 8, 240, 320, 3])

print(rgbdimages.normal_map.shape) # torch.Size([2, 8, 240, 320, 3])

print(rgbdimages.global_vertex_map.shape) # torch.Size([2, 8, 240, 320, 3])

print(rgbdimages.global_normal_map.shape) # torch.Size([2, 8, 240, 320, 3])

print('---')

# visualize

fig, ax = plt.subplots(1, 2)

ax[0].title.set_text('local normal map')

ax[0].imshow((rgbdimages.normal_map[-1, -1].numpy() * 255).astype(np.uint8))

ax[1].title.set_text('global normal map')

ax[1].imshow((rgbdimages.global_normal_map[-1, -1].numpy() * 255).astype(np.uint8))

plt.show()

torch.Size([2, 8, 240, 320, 3])

torch.Size([2, 8, 240, 320, 3])

torch.Size([2, 8, 240, 320, 3])

torch.Size([2, 8, 240, 320, 3])

---
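
For intuition about what a vertex map contains, here is a minimal NumPy sketch of the standard pinhole back-projection for a single frame, followed by a rigid transform into the world frame. It is not taken from the RGBDImages implementation: the helper names backproject and to_global, the intrinsics values, and the camera-to-world pose convention are all assumptions made for illustration.

```python
import numpy as np

def backproject(depth, K):
    """Local vertex map (H, W, 3) from a depth image (H, W) and 3x3 intrinsics."""
    H, W = depth.shape
    # Pixel coordinates: u indexes columns (x), v indexes rows (y).
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    x = (u - K[0, 2]) * depth / K[0, 0]
    y = (v - K[1, 2]) * depth / K[1, 1]
    return np.stack([x, y, depth], axis=-1)

def to_global(vertex_map, pose):
    """Apply a 4x4 camera-to-world pose (assumed convention) to every vertex."""
    R, t = pose[:3, :3], pose[:3, 3]
    return vertex_map @ R.T + t

# Hypothetical camera and a flat wall 2 m in front of it.
K = np.array([[300.0, 0.0, 160.0],
              [0.0, 300.0, 120.0],
              [0.0, 0.0, 1.0]])
depth = np.full((240, 320), 2.0)

pose = np.eye(4)
pose[:3, 3] = [1.0, 0.0, 0.0]  # camera shifted 1 m along the world x-axis

local_vertices = backproject(depth, K)
global_vertices = to_global(local_vertices, pose)

print(local_vertices.shape)       # (240, 320, 3)
print(local_vertices[120, 160])   # [0. 0. 2.]
print(global_vertices[120, 160])  # [1. 0. 2.]
```

The pixel at the principal point back-projects onto the optical axis, so its local position is (0, 0, 2), and the pose then shifts it by one metre in x. With an identity pose the local and global maps coincide.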

Transfer between GPU/CPU¶

*NOTE:* Make sure you have run the prerequisites section before running this section.

RGBDImages supports easy transfer between CPU and GPU. The transfer moves all tensors in the RGBDImages object to the target device.

[5]:

# initialize RGBDImages

rgbdimages = RGBDImages(colors, depths, intrinsics, poses)

if torch.cuda.is_available():

    # transfer to GPU

    rgbdimages = rgbdimages.to("cuda")

    rgbdimages = rgbdimages.cuda() # equivalent to rgbdimages.to("cuda")

    print(rgbdimages.rgb_image.device) # "cuda:0"

    print('---')

# transfer to CPU

rgbdimages = rgbdimages.to("cpu")

rgbdimages = rgbdimages.cpu() # equivalent to rgbdimages.to("cpu")

print(rgbdimages.rgb_image.device) # "cpu"

cuda:0

---

cpu

Detach and clone tensors¶

*NOTE:* Make sure you have run the prerequisites section before running this section.

RGBDImages.detach() returns a new RGBDImages object whose internal tensors do not require grad. RGBDImages.clone() returns a new RGBDImages object whose internal tensors are clones of the originals.

[6]:

# initialize RGBDImages

rgbdimages = RGBDImages(colors.requires_grad_(True),

                        depths.requires_grad_(True),

                        intrinsics.requires_grad_(True),

                        poses.requires_grad_(True))

# clone

rgbdimages1 = rgbdimages.clone()

print(torch.allclose(rgbdimages1.rgb_image, rgbdimages.rgb_image)) # True

print(rgbdimages1.rgb_image is rgbdimages.rgb_image) # False

print('---')

# detach

rgbdimages2 = rgbdimages.detach()

print(rgbdimages.rgb_image.requires_grad) # True

print(rgbdimages2.rgb_image.requires_grad) # False

True

False

---

True

False
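
The same distinction exists for plain PyTorch tensors, which may help when reasoning about what the two methods return. The sketch below uses only torch.Tensor.clone and torch.Tensor.detach on a small placeholder tensor. Whether RGBDImages applies exactly these calls to each internal tensor is an assumption.

```python
import torch

x = torch.ones(3, requires_grad=True)

cloned = x.clone()     # new memory, still attached to the autograd graph
detached = x.detach()  # same memory, cut off from the autograd graph

print(cloned.requires_grad)                 # True
print(detached.requires_grad)               # False
print(cloned.data_ptr() == x.data_ptr())    # False
print(detached.data_ptr() == x.data_ptr())  # True

# Gradients flow back through the clone to the original tensor.
cloned.sum().backward()
print(x.grad)  # tensor([1., 1., 1.])
```

In short, a clone copies the data but keeps gradient flow, while a detached tensor shares the data but stops gradient flow.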

Channels first and channels last representation¶

*NOTE:* Make sure you have run the prerequisites section before running this section.

RGBDImages supports both a channels first and a channels last representation. The to_channels_first() and to_channels_last() methods convert between the two.

[7]:

# initialize RGBDImages

rgbdimages = RGBDImages(colors, depths, intrinsics, poses)

print(rgbdimages.rgb_image.shape) # torch.Size([2, 8, 240, 320, 3])

print('---')

# convert to channels first representation

rgbdimages1 = rgbdimages.to_channels_first()

print(rgbdimages1.rgb_image.shape) # torch.Size([2, 8, 3, 240, 320])

print('---')

# convert to channels last representation

rgbdimages2 = rgbdimages1.to_channels_last()

print(rgbdimages2.rgb_image.shape) # torch.Size([2, 8, 240, 320, 3])

print('---')

torch.Size([2, 8, 240, 320, 3])

---

torch.Size([2, 8, 3, 240, 320])

---

torch.Size([2, 8, 240, 320, 3])

---
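
In terms of array layout, the two representations differ only in where the channel axis sits: (B, L, H, W, C) for channels last and (B, L, C, H, W) for channels first. The NumPy sketch below shows that axis move on a placeholder array. It illustrates the layout change only and makes no claim about how the two methods are implemented.

```python
import numpy as np

channels_last = np.zeros((2, 8, 240, 320, 3))  # (B, L, H, W, C)

# Move the channel axis from the last position to just after (B, L).
channels_first = np.moveaxis(channels_last, -1, 2)  # (B, L, C, H, W)

# And back again.
restored = np.moveaxis(channels_first, 2, -1)  # (B, L, H, W, C)

print(channels_first.shape)  # (2, 8, 3, 240, 320)
print(restored.shape)        # (2, 8, 240, 320, 3)
```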

Visualization¶

*NOTE:* Make sure you have run the prerequisites section before running this section.

For easy and quick visualization of RGBDImages, one can use the .plotly(batch_index) method:

[8]:

# initialize RGBDImages

rgbdimages = RGBDImages(colors, depths, intrinsics, poses)

# visualize

rgbdimages.plotly(0).show()